Introducing the Measurements extension for MuseIDE

The Measurements extension is a free (and open-source) project extension for MuseIDE and the Muse Test Framework that adds evaluation of performance criteria to a test. This initial release adds two new capabilities:

- Collect and store the durations of steps in the test, for later analysis.

- Compare the duration of steps to performance goals and record a test failure when the goal is exceeded.

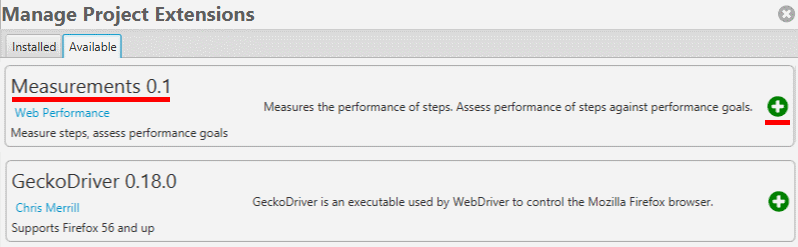

The extension is available for installation directly within MuseIDE: after opening your project, go to Extensions and switch to the Available tab. The Measurements extension can then be installed into your project with the click of a button:

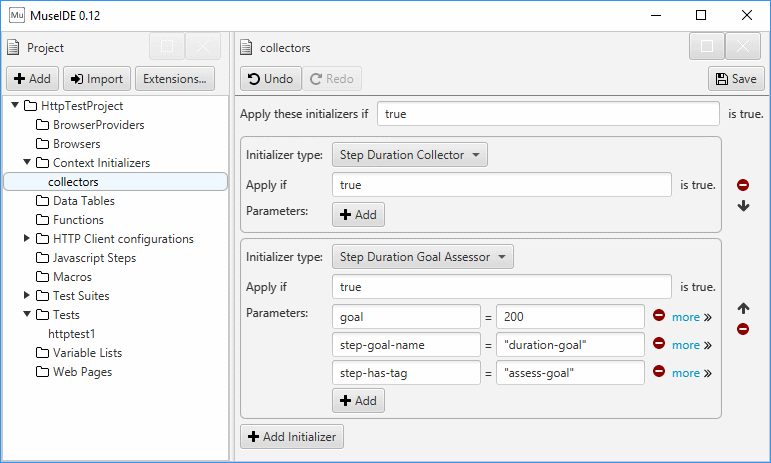

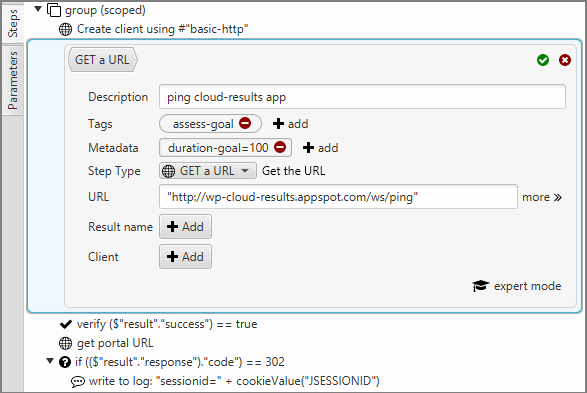

Each of the new capabilities is implemented as a context initializer, which means that to use it, you must add it to a context initializer group. Here is an example with both features in use:

Step Duration Collector

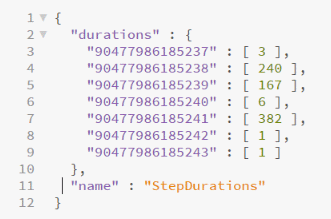

For the Step Duration Collector, that is all there is to do. Once added to an initializer group, it collects the duration of each step in the test. When the test is complete and its output is stored, the durations appear in a file named StepDurations.json.

This output is intended to be consumed by other tools for analysis (which could include transforming it into a more human-readable format). Each duration is identified by its Step Id, which references a step in the test. Since a step can execute multiple times in a test (e.g. inside a loop), each step has a list of recorded durations.
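As an illustration of the kind of analysis tool this output is meant for, here is a minimal sketch that summarizes the recorded durations per step. It assumes, purely for illustration, that StepDurations.json is a JSON object mapping each Step Id to a list of durations in milliseconds; the real file layout and units may differ, so adjust the parsing to match your actual output. The `summarize` function and the sample data are hypothetical.

```python
import json
from statistics import mean

# ASSUMPTION: StepDurations.json is modeled as {step_id: [duration_ms, ...]}.
# The actual layout written by the extension may differ.
SAMPLE = """
{
  "step-1": [120, 135, 110],
  "step-7": [480]
}
"""


def summarize(durations_by_step):
    """Return {step_id: (count, min, max, mean)} for every step with durations."""
    summary = {}
    for step_id, durations in durations_by_step.items():
        if not durations:
            continue  # nothing recorded for this step, so nothing to summarize
        summary[step_id] = (len(durations), min(durations), max(durations), mean(durations))
    return summary


if __name__ == "__main__":
    data = json.loads(SAMPLE)
    for step_id, (runs, lo, hi, avg) in sorted(summarize(data).items()):
        print(f"{step_id}: runs={runs} min={lo} max={hi} mean={avg:.1f}")
```

Keeping every execution in a list (rather than a single number per step) is what makes statistics such as min, max and mean possible for steps that run inside loops.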

Note that a test's output is stored when the test is run from the command line with the output option, for example:

muse run test1 -o out-folder

Step Duration Goal Assessor

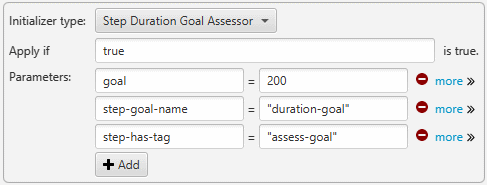

This feature has a few parameters that you must configure before it is useful. Looking more closely at the example above, the parameters are:

- goal (required) specifies the default goal against which performance is measured. It is expressed in milliseconds (200ms in the example above).

- step-has-tag (optional) filters which steps are evaluated. Its value is a tag that you add to the steps that should be assessed; steps without this tag are ignored. While technically optional, in practice it is always needed.

- step-goal-name (optional) allows a step to have a goal other than the default. Its value is the name of an attribute on a step; if an attribute with this name is present on the step, its value is interpreted as that step's performance goal, an integer in milliseconds.
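To make the interaction between these three parameters concrete, here is a minimal sketch of the assessment rules as described above. It is not the extension's implementation: `Step`, `goal_for` and `assess` are hypothetical names, and treating "exceeded" as a duration strictly greater than the goal is an assumption. A non-empty result stands in for the goal failure event that causes the test to fail.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


# Hypothetical model of a test step: its id, its tags, and its metadata attributes.
@dataclass
class Step:
    step_id: str
    tags: Set[str] = field(default_factory=set)
    attributes: Dict[str, str] = field(default_factory=dict)


def goal_for(step: Step, goal: int, step_goal_name: Optional[str]) -> int:
    """Use the step's own goal attribute (ms) if present, else the default goal."""
    if step_goal_name and step_goal_name in step.attributes:
        return int(step.attributes[step_goal_name])
    return goal


def assess(step: Step, durations_ms: List[int], goal: int,
           step_has_tag: Optional[str] = None,
           step_goal_name: Optional[str] = None) -> List[str]:
    """Return one failure message per execution whose duration exceeded the goal."""
    if step_has_tag and step_has_tag not in step.tags:
        return []  # steps without the configured tag are ignored
    limit = goal_for(step, goal, step_goal_name)
    return [f"{step.step_id}: {d}ms exceeded goal of {limit}ms"
            for d in durations_ms if d > limit]


if __name__ == "__main__":
    # Mirrors the configuration discussed here: default goal 200ms,
    # tag "assess-goal", per-step goal attribute "duration-goal".
    login = Step("login", {"assess-goal"}, {"duration-goal": "100"})
    setup = Step("setup")  # untagged, so never assessed
    print(assess(login, [90, 150], 200, "assess-goal", "duration-goal"))
    print(assess(setup, [500], 200, "assess-goal", "duration-goal"))
```

Running it prints one failure for the 150ms execution of the tagged step (its 100ms goal overrides the 200ms default) and an empty list for the untagged step, even though its 500ms duration is far above the default goal.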

Look at the tags and metadata attributes on the step below. With the configuration shown above, goals will be assessed on this step because it has the assess-goal tag. The goal will be 100ms rather than the default 200ms, because the step has a duration-goal attribute.

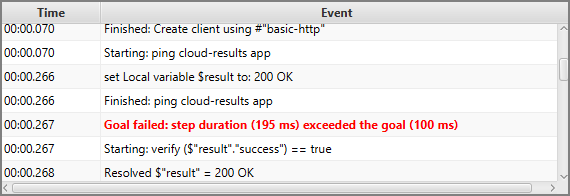

When the test is run in the IDE, a missed performance goal generates an event that looks like this:

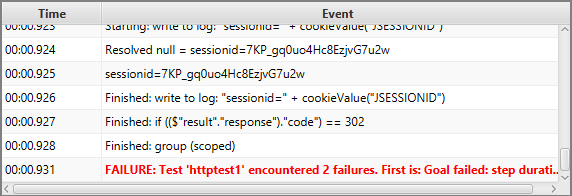

The goal failure event causes the test to fail.

If you want to dig into the source, it can be found on GitHub.

As always, please contact us with any questions!

Chris