The Fastest Webserver?

Looking for the snappiest, fastest web server software available on this here internet? So were we. Valid, independent, non-synthetic benchmarks can be difficult to find. Of course, we all know that benchmarks don’t tell us everything we need to know about real-world performance; but what’s the fun of having choices if we can’t pick the best?

Exactly. I decided to do a little research project of my own.

Test Plan

I selected for this exercise recent (as of October 2011) versions of Apache, Nginx, Lighttpd, G-WAN, and IIS — a list that includes the most popular web servers as well as web servers that came recommended for their ability to rapidly serve static content. The test machine was a modern quad-core workstation running CentOS 6.0. For the IIS tests I booted the same machine off of a different hard drive running Windows Server 2008 SP2.


Each web server was left in its default configuration to the greatest extent practical — I was willing, for example, to increase Apache’s default connection limit, and to configure Lighttpd to use all four processor cores available on the test machine. I’m willing to revisit any of these tests if I discover that there is a commonly used optimization that for some reason is disabled by default, but these are all mature software packages that should perform well out of the box.

I decided to run two suites of tests, named “realistic” and “benchmark.” The “realistic” test represents a web server delivering a lightweight website with static HTML, images, JavaScript, and CSS. The “benchmark” test represents an unlikely scenario involving numerous files of roughly 1 kilobyte. The realistic test is intended to provide a performance profile for a web server under ordinary usage conditions, while the benchmark test is designed to stress the server software’s ability to rapidly write responses to the network card.

The results in this article describe the behavior of heavily trafficked web servers delivering static content. I believe that performance metrics of this nature can be useful for understanding the behavior of systems under load, but we should remember that real-world systems are complex and that performance can be constrained by many variables other than the efficiency of any particular software package. In particular, these numbers will not necessarily be meaningful for systems that will be performing functions related to dynamic content, such as maintaining session state or performing searches.

The “Realistic” Test

For the realistic test, I downloaded a snapshot of Google’s front landing page and converted it into a very small static site. I chose this page because it represents a real, minimalistic page with a variety of resource sizes and types. Very few organizations offer a slimmer first impression.

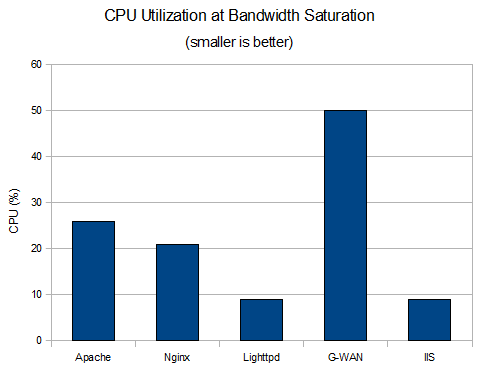

Apache, Nginx, Lighttpd, G-WAN and IIS all comfortably saturated the test network at roughly 930 megabits per second (after TCP overhead), but some variation in efficiency at near-saturation is still apparent:

A lower number here presumably implies reduced hardware and energy costs, and also represents excess capacity that presumably could be used for other tasks.

(G-WAN, despite proving a snappy little web server in the benchmark test, eagerly consumed 50% of available CPU resources almost immediately upon receiving requests. As nearly as I could tell given the time I had available, G-WAN uses a different process model from other server software and CPU utilization is not necessarily proportional to load.)

The “Benchmark” Test

For the benchmark test, I decreased the size of the resources to one kilobyte and increased the number of resources loaded per connection from 5 to 25. The former action was a deliberate adjustment of a test variable, but the latter was simply an operational necessity: opening and closing TCP connections too rapidly burns a surprising amount of CPU power, tests the operating system’s TCP implementation as much as, if not more than, the web server, and beyond a certain threshold simply isn’t permitted by the client-side OS even with tuning.

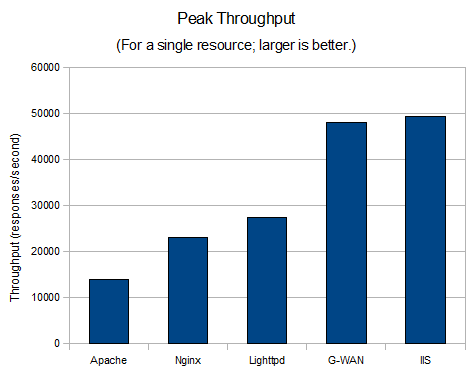

The goal of the benchmark test was to measure the maximum throughput of each server in requests per second. Each test was considered complete when throughput stopped increasing with respect to load.
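The stopping rule described above can be sketched in a few lines of Python. This is only an illustration, not the logic of the load testing tool used for these tests: the function name `find_plateau`, the 2% tolerance, and the sample numbers are all assumptions made up for the example.

```python
def find_plateau(samples, tolerance=0.02):
    """Return the index of the load step where throughput stopped increasing.

    samples: list of (load, requests_per_second) pairs, ordered by increasing load.
    tolerance: smallest relative gain that still counts as an increase
               (2% is an assumed value, not one taken from the article).
    Returns None if throughput was still climbing at the last step.
    """
    for i in range(1, len(samples)):
        previous_rps = samples[i - 1][1]
        current_rps = samples[i][1]
        # If the new step did not beat the previous one by more than the
        # tolerance, treat the previous step as the maximum throughput.
        if current_rps < previous_rps * (1 + tolerance):
            return i - 1
    return None


# Hypothetical numbers for illustration only; these are not measured results.
example = [(100, 5000), (200, 9800), (300, 14000), (400, 14100), (500, 14050)]
peak_index = find_plateau(example)
peak_load, peak_rps = example[peak_index]
```

A tolerance is needed because measured throughput is noisy; without it, a tiny fluctuation upward would keep the test running indefinitely.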

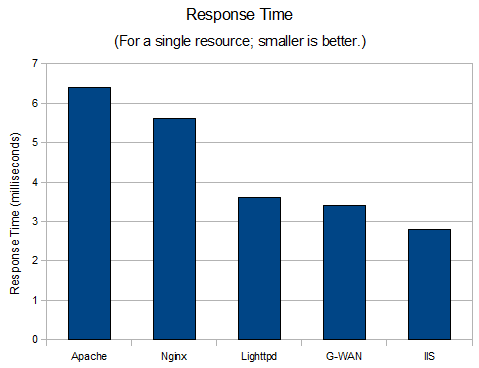

I also monitored average response times; note that these are average round-trips for a single resource, running over 1 or 2 switches (varying due to the use of multiple load generating engines), such that all systems are within about 50 feet of each other. The measurement is a running average taken during a sampling window between the second and third minutes into each test, when the system would be under only modest load (about 500 to 750 requests per second, actual).
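As a rough sketch of that measurement, the average over the sampling window could be computed as follows. The function name `window_average` and the `(elapsed_seconds, response_ms)` sample format are assumptions for illustration; the actual tool's data format is not described here.

```python
def window_average(samples, start_s=120.0, end_s=180.0):
    """Average response time over a sampling window.

    samples: iterable of (elapsed_seconds, response_ms) pairs (assumed format).
    start_s, end_s: window bounds; the defaults cover the span between the
                    second and third minutes of a test.
    """
    in_window = [rt for t, rt in samples if start_s <= t < end_s]
    if not in_window:
        raise ValueError("no samples fall inside the sampling window")
    return sum(in_window) / len(in_window)


# Hypothetical samples for illustration only; not data from these tests.
demo = [(60.0, 10.0), (120.0, 2.0), (150.0, 4.0), (180.0, 9.0)]
average_ms = window_average(demo)
```

Only the samples at 120 and 150 seconds fall inside the window, so the early warm-up sample and the later, more heavily loaded sample do not affect the result.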

A Note About Response Times

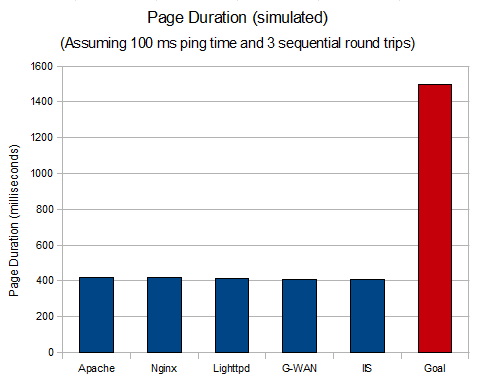

The ability to respond to a request in less than 3 milliseconds is certainly an impressive technological feat. But to put these numbers in perspective, let’s look at how they would affect a typical use case. Let’s assume that the user has a 100 millisecond round-trip (ping) time to your server, that we will need at most 3 sequential round trips (which is about right if we’re loading a typical page with 12-18 resources), and also need one round-trip for the SYN/ACK to establish the first TCP connection. Based on these assumptions, I calculated the simulated page load times below. The red bar represents the 1.5 second mark, a page duration goal that Google, in their wisdom, has deemed the dividing line between “fast” and “slow” web pages in their own analysis software.
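The arithmetic behind such an estimate can be sketched as below. The exact formula used for the chart is not spelled out, so this is one plausible reading of the stated assumptions: every round trip costs one network round-trip time, and each of the sequential request round trips additionally costs one server response time. The function name and parameters are hypothetical.

```python
def simulated_page_load_ms(server_response_ms,
                           rtt_ms=100.0,
                           sequential_round_trips=3,
                           handshake_round_trips=1):
    """Estimate page load time from network latency and server response time.

    Assumed model (not confirmed by the article): the TCP handshake costs
    one network round trip, and each sequential request costs one network
    round trip plus the server's response time.
    """
    network_ms = (handshake_round_trips + sequential_round_trips) * rtt_ms
    server_ms = sequential_round_trips * server_response_ms
    return network_ms + server_ms


# A server answering in 3 ms versus one answering in 1 ms, at a 100 ms ping.
slow_ms = simulated_page_load_ms(3.0)
fast_ms = simulated_page_load_ms(1.0)
difference_ms = slow_ms - fast_ms
```

Under this model the network contributes 400 ms regardless of the server, so a 2 millisecond difference in response time changes the total by only 6 ms.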

If you’re interested in round-trip times, how they affect the performance of your system, and how to optimize them away, Google has a comprehensive article on the subject.

Commentary

This data comes with a number of qualifications.

For common use cases with lots of static content, such as a corporate front landing page, a 1 gigabit network card will become saturated long before any other resource. The larger your pages (including all resources, such as CSS and image files) in kilobytes, the more this advice rings true.
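To get a feel for where that ceiling sits, here is a back-of-the-envelope sketch. It assumes the roughly 930 megabits per second of usable throughput observed in the realistic test, treats a kilobyte as 1,000 bytes, and ignores HTTP header overhead; the 100 KB page size is a made-up example, not a figure from these tests.

```python
def max_pages_per_second(page_size_kb, link_mbps=930.0):
    """Upper bound on full page deliveries per second over a saturated link.

    page_size_kb: total page weight including all resources, in kilobytes
                  (1 KB taken as 1,000 bytes, an assumption for simplicity).
    link_mbps: usable link throughput in megabits per second.
    """
    bits_per_page = page_size_kb * 1000 * 8
    return link_mbps * 1_000_000 / bits_per_page


# Hypothetical 100 KB page on a saturated 1 gigabit link.
ceiling = max_pages_per_second(100)
```

Doubling the page weight halves this ceiling, which is why heavier pages hit the network limit sooner than any limit imposed by the server software.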

Request-per-second numbers matter for systems that serve large numbers of small files. Such systems should be unusual. For example, if you are delivering pages with many small user interface elements, each requiring its own image resource, consider using CSS sprites to combine those resources into one.

As concerns the simulated page durations, moving your servers closer to your end users is the best way to reduce those numbers. This is where content delivery networks make a strong showing.

These measurements don’t describe the behavior of a system populated with many gigabytes of unique files that are accessed with uniform frequency. Such systems can’t keep all content in cache and can be limited by hard disk speed.

Finally, static content delivery scales out almost without limit. No matter how inefficient the system, we can always increase capacity by adding more hardware, and the cost of doing so needs to be weighed carefully against the ease of maintaining an alternative software configuration.

Conclusions

Each test revealed IIS 7 as a clear frontrunner.

IIS administrators may give themselves a big pat on the back and feel free to stop reading now. Our beloved Linux server administrators, however, will need to settle their priorities.

Lighttpd is the platform of choice if you want a lean and fast environment. Its low CPU utilization should help reduce energy and hardware costs, and its response times are within a millisecond of the frontrunner.

G-WAN is marketed on its ability to push requests per second, and it unquestionably delivers, but based on these tests it may not have the all-around efficiency that would recommend it for general purpose use.

Apache, arguably the most popular software package in the list, yielded the worst results, and Nginx certainly deserves an honorable mention. Oh, but feel free to continue using any web server that suits your organization, as long as it suits you to run something other than . . . the Fastest Webserver.

18 Comments

21 November 2011 benstill

I’m extremely sceptical of these results and how this test was run. In my own experience over several years and many high traffic sites, IIS has been an absolute dog in every respect. Changing to Nginx meant a performance increase of literally thousands of times.

I understand that your Webperformance software is PC based, so you might have a natural tendency to test on a Windows server. But since the title of your test results is about “fastest webserver” (rather than fastest Windows webserver), perhaps it would be fairer to run these on some flavour of Linux.

I’m one of your customers and have used your software to load test sites. In my opinion it lowers the quality of your otherwise fine product to include statements like “Oh, but feel free to continue using any web server that suits your organization, as long as it suits you to run something other than . . . the Fastest Webserver.”

There are plenty of other benchmark results out there comparing different webservers – perhaps if you re-title this post to be “what might be the fastest Windows webserver” it might be a bit more accurate?

22 November 2011 Michael Czeiszperger

Hi Ben– Thanks for taking the time to comment! There’s lots of ways to measure that something is the fastest, and this is just our take on it. It was supposed to be tongue-in-cheek, as these types of comparisons are a silly but fun exercise. Lane will get back to you with specific answers, but we’re not a Windows shop; in fact, the original version of our software was developed on Linux and the Mac, and even now both the server monitors and load engines run on Linux. The only reason the controller runs on Windows-only is 99.5% of our customers preferred that platform, and it was a painful, but necessary way to reduce development costs.

22 November 2011 lane

Hi Benstill,

I also found the IIS result surprising. Ultimately, I have to do the research and report the results I actually see.

This wasn’t a Windows-only research project. I used removable hard drives to test Linux and Windows web servers on otherwise identical hardware. In fact, I did not originally intend to test IIS or Windows at all — my initial plan included Linux-based web servers only.

If there is a limitation to this research, in my opinion, it is that we (as a practical matter) restricted ourselves to a small amount of static content. This means that many components of IIS were simply turned off — which is the correct configuration for the use case we simulated. It is my hope that we will be able to attempt more ambitious research projects in the future.

There seems to be no reason to believe that IIS is fundamentally inefficient per se; I would suggest that the discrepancy between your (and my own) experience with under-performing IIS deployments in the field and the results here must be attributed to some other factor.

Thank you for your feedback,

–Lane

29 November 2011 craigvn

The IIS results don’t really surprise me at all. Sites like StackOverflow/StackExchange do 10 million + page views per day on only a few IIS servers and one SQL Server. How well the website is coded is important as well, but in general anyone who thinks the Windows stack doesn’t scale is just kidding themselves.

29 November 2011 Webserver Performance Test | Code-Inside Blog

[…] this site ran a test and IIS “won”. However, there is also a shining […]

30 November 2011 Perastikos345

“…saturated the test network at roughly 930 gigabits per second…”

Oops! 930 Gbps?!?!?! The PCI Express 3.0 bus tops out @ 250 Gbps, but your *NIC* reaches 930 Gbps? Are you sure about these numbers?

30 November 2011 lane

Facepalm. That should read “megabits.” Good catch, thank you.

30 November 2011 Hollywood

By far the fastest web server is Caucho’s Resin…. by far. That said I’m currently at an IIS shop, and current IIS is so much improved from the early versions – for sure.

30 November 2011 Daniel15

Nice benchmark, except you didn’t optimise your configuration (who would be running a live environment on the default config?), nor did you test Cherokee. I think Cherokee would be faster than Nginx.

30 November 2011 jariza

Just two questions: What about dynamic contents? and Why do you use Apache instead of “the Fastest Webserver”?

1 December 2011 chris

> What about dynamic contents?

That is a much harder test. Dynamic content for many/most web apps is not generated by the web server. It is generated by a completely separate set of code, such as a PHP interpreter, .NET or Java VM. That said, it is certainly possible to test. We’ll keep that in mind for a future test.

> Why do you use Apache instead of “the Fastest Webserver”?

Nobody here wants to administer a Windows server :>

On a more serious note, while it is fun to proclaim one server faster than the others and then sit back and watch the resulting fireworks, the last chart in the report puts the results in perspective. The difference in single-transaction response time is small compared to the total load time for a page. This difference will quickly be swamped by latency in real-world networks. If you are browsing from the opposite coast, the statistical deviation will likely be higher than the difference between these servers. So to answer your question, it won’t make a measurable difference so we use the server that has the features we need.

1 December 2011 [Software] Comparazione Webservers

[…] […]

7 December 2011 ComputerGuy

Try enabling caching on Apache:

http://httpd.apache.org/docs/2.2/caching.html

http://httpd.apache.org/docs/2.2/mod/mod_cache.html

http://httpd.apache.org/docs/2.2/mod/mod_mem_cache.html

ADMIN’S EDIT: fix broken links.

7 December 2011 lane

Hi ComputerGuy,

We discussed caching on Apache while developing this article. There are some reasons why I didn’t explore that option.

The documentation you listed doesn’t strongly recommend caching for the use cases we ran. This is because caching at the operating system / file system level is enough to make sure that all resources are kept in memory.

I don’t strictly discount the possibility that caching could in some marginal way improve Apache’s performance. However, I’m convinced that Apache’s performance bottlenecks are explained by its heavyweight process model, which conflates parallelism with concurrency leading to unnecessary resource utilization. Quite simply, it is not efficient to maintain a 1:1 mapping of TCP connections with heavyweight operating system threads.

I would like to spend some time developing benchmarks for various Apache configuration options, but a proper exploration of these options would go well beyond the scope of the article above.

Thanks,

–Lane

8 December 2011 ComputerGuy

http://www.webperformance.com/load-testing-tools/blog/2011/11/what-is-the-fastest-webserver/

“…I’m willing to revisit any of these tests if I discover that there is a commonly used optimization that for some reason is disabled by default…”

http://httpd.apache.org/docs/2.2/caching.html

“This document supplements the mod_cache, mod_disk_cache, mod_mem_cache, mod_file_cache and htcacheclean reference documentation. It describes how to use Apache’s caching features to accelerate web and proxy serving, while avoiding common problems and misconfigurations.

…”

http://www.serverwatch.com/tutorials/article.php/3436911/Apache-Server-Performance-Optimization.htm

“…

In most situations, but particularly with static sites, the amount of RAM is a critical factor because it will affect how much information Apache can cache. The more information that can be cached, the less Apache has to rely on the comparatively slow process of opening and reading from a file on disk. If the site relies mostly on static files, consider using the mod_cache; if plenty of RAM is available, consider mod_mem_cache.

…”

http://www.hosting.com/support/linux-cloud-vps/performance-tuning-apache-modules

“…

By enabling the mod_cache directive, we can configure Apache to save content to the disk, memory, or a cache file to decrease load times. Enabling server-side caching on server that handles a significant amount of traffic can dramatically decrease load times. …”

ADMIN’S EDIT: fix broken links.

24 May 2013 10 Great Web Performance Articles from 2011 » LoadStorm LoadStorm

[…] the Windows side of the equation, the folks at WebPerformance caused a stir among their readers when they ran a benchmark testing Apache, Nginx, Lighttpd, and G-WAN on a CentOS 6.0 quad-core […]

10 November 2014 IIS vs Apache | Marcus Vinicius dos Reis

[…] http://www.webperformance.com/load-testing-tools/blog/2011/11/what-is-the-fastest-webserver/ […]

18 February 2017 Software Not Ready For Users Until Load Tested - All Round Community

[…] under load. This is important because there’s a big difference between how fast web servers perform under load compared to the time when they are not under […]