Velocity Web Performance Conference 2010 Day One

Last week I was in San Jose at O’Reilly’s Velocity Conference, the only conference solely dedicated to the subject of website performance and testing.

The first talk of the day was Metrics 101: What to Measure on Your Website, given by Sean Power, one of the authors of Complete Web Monitoring. He is an excellent speaker, and the talk was full of good information. What follows are my thoughts on the subjects he brought up, including quite a bit of my own extrapolation from his ideas, and I will try to make it clear which are which.

Sean’s first useful observation was that the metrics you collect should change depending on the business model. Even a single website may have sections with different business models, so it’s critical to understand those models when writing a load testing or web performance plan. He implied that the way to get useful information out of web metrics is to look for correlations between raw metrics, performance, and business goals.

On the web performance monitoring side of things he divided the approaches into two: virtual user monitors and “real user” monitors. The term “virtual” has the same meaning as in load testing, but here it refers to simulated users that come from various locations around the world, one at a time, to measure web page load times while the site is under load from real users. These are similar to the virtual users generated during a load test, the difference being that monitoring virtual users run only one at a time, periodically, around the clock.

He contrasted virtual user monitors with a new type of web performance monitor that uses packet sniffer technology to measure the performance seen by real human users. Real User monitoring technology doesn’t need scripts to be developed, and can save all of the web traffic that hits a server, including much more information than is in web logs, so you have a complete picture of what users were doing when a problem occurs. He said that bypassing the test development process means it will work regardless of the browser technology being used, and so has no issues with Flex, GWT, or any of the harder-to-simulate browser protocols that are difficult, but not impossible, to support in virtual user test case-based monitoring services.

Another advantage of this approach is that it’s theoretically possible to recreate all of the inputs to a website that led to a crash or some other problem. After thinking about this for a while I realized that doing this successfully for any site with a database means the database would have to have been backed up frequently, and the entire site rewound to a point before the problem occurred, which is quite a bit of effort. Since the production site needs to keep going, this would require a near-carbon-copy test system that mirrors production, something that not many companies have in place. Simply replaying all of the HTTP requests at a website wouldn’t work in any event, since the web server would respond with different session tracking variables, so there are lots of technical details that would have to be worked out before one could replay exactly what happened to a web server. In this regard load testing still has the advantage of being able to create realistic load conditions, find problems, and then repeat the test exactly to make sure they’re fixed.

In my opinion the other advantage, or disadvantage depending on your point of view, is the lack of business goals. For example, if the business goal of a site is to generate leads, a virtual user based system can create test cases for one or more representative funnels that give an indication of the performance the typical customer is experiencing. With a Real User monitoring system there are no test cases, and so while you do get a record of the circuitous path every user takes, that record obscures the measurements tied to business goals. Say, for example, a user decides to check stock prices or go to the bathroom in the middle of filling out the forms for purchasing a product online. The time from when that user starts to purchase shoes until they complete the checkout is not representative of the typical customer, who did not interrupt the purchase process. While it’s useful to know how many delays and diversions customers take during the sales funnel, that information is not actionable. From a performance management point of view, one is interested in tuning the checkout process to be as fast as possible regardless of random user behavior. Not only that, but old-style web analytics tools are already oriented towards tracking real-user behavior to look for patterns with an eye towards increasing sales.

According to Sean, another problem with real user monitoring is that it needs real users to work, and thus has no way of telling the difference between a site that simply isn’t being used and one that’s broken. A virtual user based monitoring system, on the other hand, can detect that your site has stopped responding and send out an alarm so the problem can be fixed before customers notice it. Real User monitors wouldn’t be able to detect a problem until an actual user sees it, and even then they would have trouble detecting the same problems that a virtual user page validator would spot easily. And then there are the privacy and security issues. Because literally everything a user does is stored outside of the normal company database (usernames, passwords, credit card numbers, social security numbers, names, addresses), all of it ends up in a 3rd party box that is another target for identity thieves.

As a result, Real User monitoring technology is something interesting to consider as a complement to the information you already collect from your existing virtual user performance monitoring and web analytics services. Companies at the conference in this space were Cordiant, TrueSetting, Omniture, and Tealeaf.

Changing the Web Metrics Metaphor

With all of the newer web technologies, including AJAX, GWT, Flex, and even iFrames to some extent, the web-page metaphor for thinking about a web site is breaking down. Instead of one static page followed by another there are streams of data, and Sean implored tool makers and testers to update the way they think about testing to make sense of the new web designs. One example we encounter all of the time is an AJAX-based site that refreshes part of the page during a user interaction. The web page load time is the time for the page to come up the first time. If the user then changes the graph time period while looking at stocks, causing a data refresh, this can generate a flurry of HTTP traffic quite separate from the original page, and it needs to be timed separately. With Load Tester, we handle this situation by using “burst grouping”, which looks for activity such as in the above example, automatically groups it together, and collects statistics on not only the entire burst but on each individual call as well.
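To make the idea of timing a burst separately from the original page load more concrete, here is a minimal Python sketch. It is not how Load Tester implements burst grouping. The `Request` record, the `group_bursts` function, the half-second idle gap, and the sample URLs are all assumptions of mine for illustration. It simply starts a new group whenever the gap since the last activity exceeds a threshold.

```python
from dataclasses import dataclass


@dataclass
class Request:
    url: str
    start: float     # seconds since the session began
    duration: float  # seconds until the response completed


def group_bursts(requests, max_gap=0.5):
    """Split requests into bursts separated by more than max_gap idle seconds.

    max_gap is an assumed, tunable threshold, not a published default.
    """
    bursts = []
    current = []
    current_end = 0.0
    for req in sorted(requests, key=lambda r: r.start):
        end = req.start + req.duration
        # An idle period longer than max_gap closes the current burst.
        if current and req.start - current_end > max_gap:
            bursts.append(current)
            current = []
        current_end = end if not current else max(current_end, end)
        current.append(req)
    if current:
        bursts.append(current)
    return bursts


def burst_duration(burst):
    """Elapsed time from the first request starting to the last one finishing."""
    first_start = min(r.start for r in burst)
    last_end = max(r.start + r.duration for r in burst)
    return last_end - first_start


# Hypothetical session: an initial page load, then a chart refresh 12 s later.
session = [
    Request("/stocks", 0.0, 0.4),
    Request("/chart.js", 0.1, 0.3),
    Request("/quote.json", 0.3, 0.2),
    Request("/history?range=1y", 12.0, 0.6),
    Request("/volume?range=1y", 12.1, 0.3),
]

for number, burst in enumerate(group_bursts(session), start=1):
    print(f"burst {number}: {len(burst)} requests, {burst_duration(burst):.2f}s total")
    for req in burst:
        print(f"    {req.url}: {req.duration:.2f}s")
```

The first burst here corresponds to the traditional page load time, and the second is the refresh that a page-oriented metric would miss entirely.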

Use Percentiles Instead of Averages

Sean gave a great example showing that averages are not useful web metrics: if there are 4 people in the room and the average age is 26, you could have one 90-year-old and 3 kindergarteners. Instead he urged the use of percentiles, which is something that Load Tester supports as well. Rather than being complicated math, a percentile allows you to speak in plain English, like “80% of our users saw load times of 2 seconds or less.” In my own experience percentiles help in this regard, but can mask serious performance issues. In the last example, what if the other 20% made the back-end crash?
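Here is a small Python sketch of the difference. The ages match the room example above, but the load times are numbers I made up, and the `percentile` helper uses the simple nearest-rank definition, which is only one of several common ways to compute a percentile.

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample that has at least
    pct percent of all samples at or below it."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# The room: one 90-year-old and three kindergarteners.
ages = [90, 5, 5, 4]
print("average age:", statistics.mean(ages))
print("median age:", statistics.median(ages))

# Hypothetical page load times in seconds for ten users.
load_times = [1.2, 1.4, 1.5, 1.6, 1.7, 1.8, 1.9, 2.0, 9.5, 14.0]
print("average load time:", round(statistics.mean(load_times), 2))
print("80th percentile:", percentile(load_times, 80))
print("worst case:", max(load_times))
```

With this data the average suggests a slow site, the 80th percentile says most users saw 2 seconds or less, and only the worst case reveals the two users who waited far longer, which is why I would look at the tail alongside the percentile.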

Write an SLA for your own Website

Sean brought up a problem I see all the time with Web Performance customers, and that’s how to express their performance goals. Each company is different, and so something simple like “all web pages must load in 2 seconds or less” doesn’t give enough detail to be useful. Check the slides for his example.

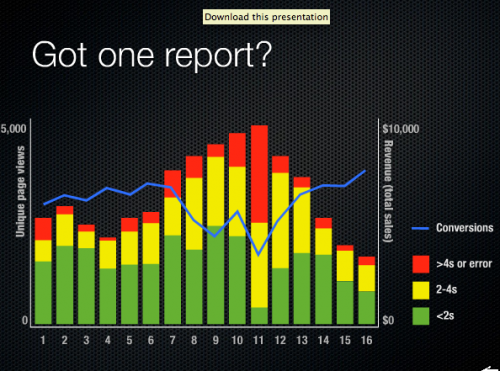

The Web Metrics 101 Chart

Sean presented what he said was the most basic web performance metrics chart, which in my opinion was a bit more complicated than that, but quite useful nonetheless. It overlaid three metrics at the same time: web page load times, overall traffic, and business logic. The goal is to get an idea of how much traffic the site is seeing and how this correlates to web page performance and business goals.
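One way to put a number on what such a chart shows is a correlation coefficient between each pair of series. The Python sketch below is my own illustration, not something from the talk: the hourly figures are invented, and `pearson` is a plain implementation of the standard Pearson correlation.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length, at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance / (spread_x * spread_y)


# Hypothetical hourly samples for one section of a site.
requests_per_minute = [120, 180, 260, 340, 420, 500]
page_load_seconds = [1.1, 1.2, 1.5, 2.1, 3.0, 4.2]
conversion_percent = [3.1, 3.0, 2.8, 2.3, 1.7, 1.1]

print("traffic vs. load time:", round(pearson(requests_per_minute, page_load_seconds), 2))
print("load time vs. conversions:", round(pearson(page_load_seconds, conversion_percent), 2))
```

A value near +1 for traffic against load time, together with a value near -1 for load time against conversions, would be the numeric version of the pattern the overlaid chart is meant to expose. Correlation alone does not prove that slow pages caused the lost conversions, so I would treat it as a pointer for where to test next.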

Load vs. Conversions vs. Performance

Michael Czeiszperger

Founder, Web Performance, Inc.