Measuring the Performance Effects of Dynamic Compression in IIS 7.0

Load Testing IIS Dynamic Compression

Matt Drew

©2009 Web Performance, Inc.

v1.0 - Jan 9, 2009

Summary

Enabling dynamic compression in IIS 7.0 can reduce the bandwidth usage on a particular file by up to 70%, but also reduces the maximum load a server can handle and may actually reduce site performance if the site compresses large dynamic files.

Overview

This article examines the trade-off between CPU utilization and bandwidth utilization when compressing content with dynamic compression in IIS 7.0. The test uses a set of static test files in a range of sizes to simulate total page size, and measures server CPU utilization and bandwidth utilization across various traffic levels.

Methodology

The analysis is based on a series of load tests performed in our test lab. We tested five sample file sizes and ran each test twice: once without compression and once with compression. In each case, we measured the bandwidth necessary to serve the load, CPU utilization, and hits per second. Since we are testing dynamic compression, all content is compressed on the fly and uncached. No script-generated content is used, so no CPU is consumed by content-generation scripts; however, since no content is cached, this effectively simulates large amounts of unique dynamic content. The target webserver, IIS 7.0 on Windows Server 2008, was rebooted between each test. Note that the IIS 7.0 default zlib level for dynamic compression was changed to allow for comparison with our previous Apache test. For more details, see Appendix A.

Analysis

Below are the results for the 10KB, 50KB, and 100KB file sizes. Dotted lines show results with compression turned off; solid lines show results with compression turned on.

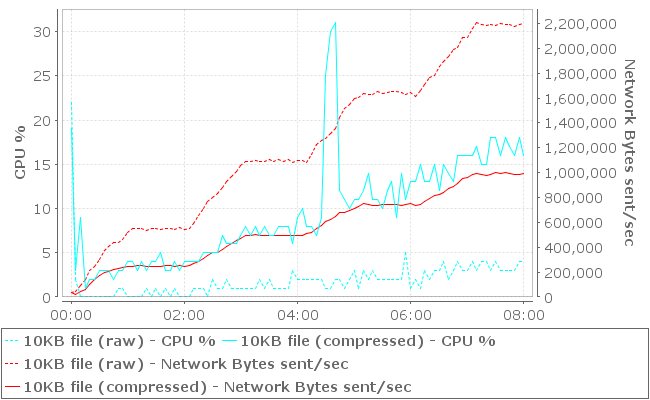

10KB files

The chart below shows the impact of compression on CPU utilization and bandwidth consumption while serving 10KB files. At each load level, turning on compression decreased bandwidth consumption by ~55% and increased CPU load by a factor of ~4 compared to the uncompressed equivalent.

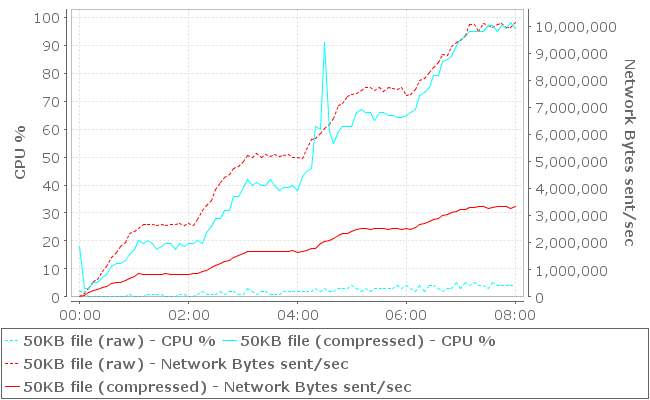

50KB files

The chart below shows the impact of compression on CPU utilization and bandwidth consumption while serving 50KB files. At each load level, turning on compression decreased bandwidth consumption by ~67% and increased CPU load by a factor of ~20 compared to the uncompressed equivalent.

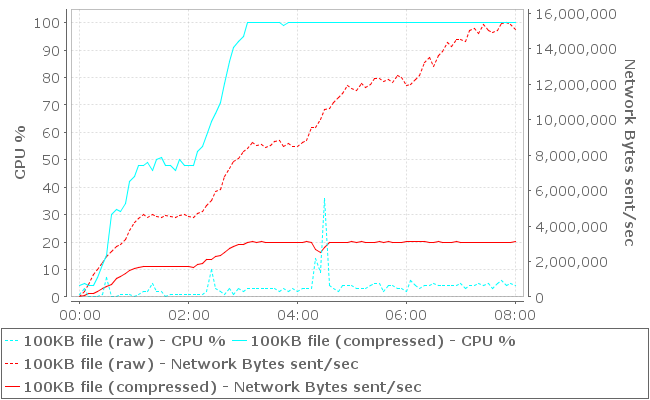

100KB files

The chart below shows the impact of compression on CPU utilization and bandwidth consumption while serving 100KB files. At this high data rate, the CPU was overwhelmed during the second ramp-up. At the first load level, turning on compression decreased bandwidth consumption by ~64% and increased CPU load by a factor of ~30 compared to the uncompressed equivalent; once the CPU became fully utilized, comparisons were no longer meaningful.

As expected, the results are very similar to our previous Apache test, since both webservers use the same gzip algorithm to compress their content. IIS does use slightly less CPU to perform its compression. This is likely due to IIS 7.0's thread-based model versus Apache's default process-based model; we'll be examining thread-based Apache MPMs for comparison in a future test.

We have been unable to determine the cause of the CPU usage spikes shown in several of the graphs. The spikes do not occur on a quiescent system; they only appear when the webserver is actively serving content.

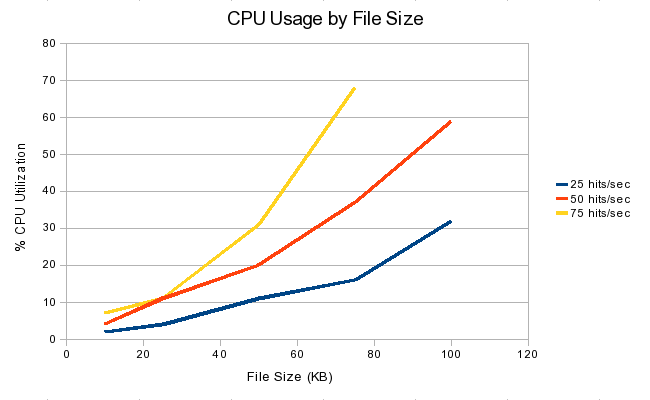

Non-Linearity of CPU Usage

Since gzip is a block compression algorithm, one might expect CPU usage to increase roughly linearly with the data rate. However, the measured CPU utilization appears to grow non-linearly as file size increases; further, the slope of the curve steepens with both load and file size.
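One way to separate the compressor's own cost from server-side effects is to time compression in isolation, one input at a time, and compare CPU time per KB across the test sizes. The sketch below does this with Python's standard `zlib` module at level 6; it is not the IIS code path, and the placeholder sample text is an assumption (substitute the real test file). If the per-KB figure stays roughly flat, the compressor itself scales linearly, and the non-linearity seen under load would have to come from elsewhere, such as the contention and scheduling effects discussed below.

```python
import time
import zlib


def compression_cost(data: bytes, level: int = 6, repeats: int = 20) -> float:
    """Average CPU seconds (process time) to zlib-compress `data` once."""
    start = time.process_time()
    for _ in range(repeats):
        zlib.compress(data, level)
    return (time.process_time() - start) / repeats


def cost_per_kb(sample: bytes, sizes_kb, level: int = 6) -> dict:
    """Map each prefix size in KB to CPU seconds spent per KB of input."""
    results = {}
    for kb in sizes_kb:
        chunk = sample[: kb * 1024]
        if len(chunk) < kb * 1024:
            raise ValueError(f"sample is shorter than {kb} KB")
        results[kb] = compression_cost(chunk, level) / kb
    return results


if __name__ == "__main__":
    # Placeholder text only; swap in the real test file to reproduce the article's inputs.
    sample = b" ".join(b"word%d" % (i % 5000) for i in range(40000))
    for kb, cost in cost_per_kb(sample, [10, 25, 50, 75, 100]).items():
        print(f"{kb:>4} KB: {cost * 1e6:.2f} microseconds of CPU per KB")
```

This measures a single thread compressing one input at a time, so it deliberately excludes the concurrency that a loaded webserver adds.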

There are a number of possible factors that could influence this data. One, of course, is CPU contention and scheduling. We cannot directly compare the compression of a single large file to simultaneous compressions of many smaller files due to the operating system overhead of scheduling and switching between threads and/or processes. Such overhead would add CPU utilization as load increased. Due to IIS's multi-threaded design, however, such overhead is inescapable, so it must be factored into real-world performance analysis.

The gzip algorithm is also sensitive to data type and structure, so it is possible that our data was significantly more difficult to compress in the larger files. The design of the test cases should prevent that from being a factor; the book data is as uniform as possible without being repetitive across the various file sizes (see Appendix A for details). Nonetheless, this remains a possibility.
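Whether the larger slices of the book are harder to compress can be checked directly by compressing each leading slice and comparing the percentage saved. A minimal sketch using Python's `gzip` module at level 6 follows; treating "KB" as 1024 bytes is our assumption, and the file name in the usage note is hypothetical.

```python
import gzip


def savings_by_prefix(data: bytes, sizes_kb, level: int = 6) -> dict:
    """Percent of bytes saved by gzip at `level` for each leading slice of `data`."""
    results = {}
    for kb in sizes_kb:
        chunk = data[: kb * 1024]
        if len(chunk) < kb * 1024:
            raise ValueError(f"input is shorter than {kb} KB")
        compressed = gzip.compress(chunk, compresslevel=level)
        results[kb] = 100.0 * (1 - len(compressed) / len(chunk))
    return results
```

For example, `savings_by_prefix(open("book.html", "rb").read(), [10, 25, 50, 75, 100])` (with `book.html` standing in for the 336KB source file) would show whether compressibility itself drifts with slice size, independent of any server load.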

Is It Worth It?

What are we getting for the use of these CPU resources? The gzip algorithm is known to be fast and efficient on text files, and indeed we see total bandwidth reductions of 60-70% on pure compressible content such as in our test. Clearly this has a sizable and easily quantifiable impact on the cost of the bandwidth to service the site.
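The bandwidth side of the trade-off is simple arithmetic. As a sketch, assume a hypothetical site serving 100 hits/s of 50KB pages (an invented figure) and apply the ~67% reduction measured at 50KB; the 30-day month is also an illustrative assumption.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # 30-day month, for illustration only


def bandwidth_mbps(hits_per_sec: float, bytes_per_response: float) -> float:
    """Average payload bandwidth in megabits per second (10**6 bits)."""
    return hits_per_sec * bytes_per_response * 8 / 1_000_000


def monthly_gb_saved(hits_per_sec: float, bytes_per_response: float, reduction: float) -> float:
    """Gigabytes (10**9 bytes) not transferred per month at a given fractional reduction."""
    return hits_per_sec * bytes_per_response * reduction * SECONDS_PER_MONTH / 1e9


# Hypothetical site: 100 hits/s of 50KB pages, using the ~67% reduction measured at 50KB.
before = bandwidth_mbps(100, 50 * 1024)
after = before * (1 - 0.67)
print(f"{before:.1f} Mbps -> {after:.1f} Mbps, "
      f"{monthly_gb_saved(100, 50 * 1024, 0.67):,.0f} GB/month saved")
```

Multiplying the saved volume by a per-GB transfer price gives the direct cost figure; the CPU side of the ledger has to be priced separately.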

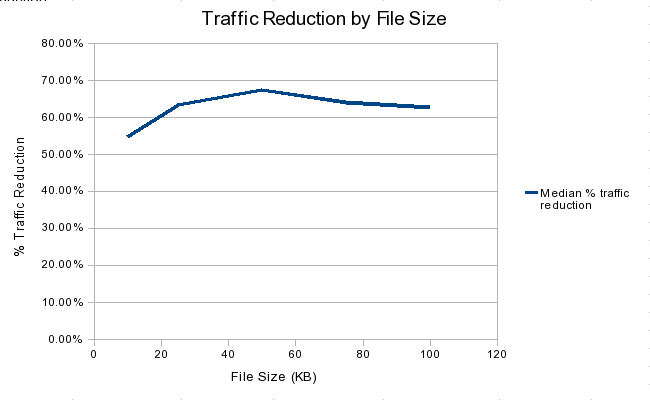

Interestingly, if we measure the percentage of traffic reduction by file size, we find that the dynamic compression performance was not uniform; there is an apparent optimal efficiency around the 50KB file size.

This graph is similar to the graph in our Apache test, with a peak in efficiency around 50KB. The same factors apply: gzip could be sensitive to the data itself, or it could be related to smaller files having more network overhead. We'll explore this in a future report.
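The network-overhead hypothesis can be illustrated with a simple model: HTTP response headers are sent uncompressed, so a fixed number of per-response bytes dilutes the savings on small files. In the sketch below, the 500-byte overhead and the 33% compressed size are hypothetical values, not measurements.

```python
def wire_reduction(body_bytes: float, compressed_fraction: float, overhead_bytes: float) -> float:
    """Fractional bytes saved on the wire when only the body is compressed.

    compressed_fraction: compressed body size / original body size.
    overhead_bytes: per-response bytes compression does not touch (e.g. HTTP headers).
    """
    before = overhead_bytes + body_bytes
    after = overhead_bytes + compressed_fraction * body_bytes
    return 1 - after / before


# Hypothetical: 500 bytes of uncompressed overhead, body shrinks to 33% of its size.
for kb in (10, 25, 50, 75, 100):
    print(f"{kb:>4} KB: {wire_reduction(kb * 1024, 0.33, 500):.1%}")
```

This model predicts that the reduction rises steadily toward the body's own savings as size grows, so it could account for a lower figure at 10KB but not for the dip above 50KB; that part would need another explanation.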

Conclusion

The advantages of compression are lower bandwidth usage and faster data transfer for large pages, which should result in a better user experience, as noted in our previous Apache report.

We recommend that dynamic websites that are already CPU-limited be cautious when enabling compression, particularly where it could affect large dynamic files. The trend in the data is clear: sites that compress large dynamic content will expend a significant amount of CPU on compression while under load. This CPU usage does not scale well and is almost certain to delay page generation, erasing compression's user-experience benefits while leaving its bandwidth savings intact. Such a site could easily end up in a situation where its perceived performance under load is considerably worse than without compression. Put another way, a CPU-limited application for which reducing bandwidth usage is a priority will need more CPU power (or more servers) to support a given load at a given perceived performance level. A hardware-based compression device, such as those found on some load-balancers, is another option for offloading compression.

The traditional software solution for decreasing the CPU load from compression is to cache the result and serve that instead, as long as the file doesn't change. However, for modern websites, at least a portion of the content is dynamic and must be compressed on the fly if it is to be compressed at all. Thus there is a clear need to cleanly separate dynamic content from static content; to minimize the size of dynamic content as much as possible; and to be cautious when enabling compression in situations where the dynamic content is large. IIS 7.0 uses static file caching by default, and when possible caches dynamic content; we will explore these capabilities in a future report.
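The caching approach can be sketched as a lookup table from response body to compressed bytes. The version below keys entries by a SHA-256 hash of the body, so any change to the content produces a new key and the "as long as the file doesn't change" check comes for free; the class and its names are hypothetical, and it keeps entries forever (a real cache would need size limits and eviction). Hashing still reads every byte, but it is typically much cheaper than DEFLATE.

```python
import gzip
import hashlib


class CompressedResponseCache:
    """Reuses gzip output for byte-identical bodies (unbounded; no eviction)."""

    def __init__(self, level: int = 6):
        self.level = level
        self._entries = {}
        self.compressions = 0  # how many times gzip actually ran

    def get(self, body: bytes) -> bytes:
        key = hashlib.sha256(body).digest()
        cached = self._entries.get(key)
        if cached is None:
            cached = gzip.compress(body, compresslevel=self.level)
            self._entries[key] = cached
            self.compressions += 1
        return cached
```

A page that is identical for every visitor is compressed once no matter how many times it is requested. A page personalized per user produces a new key on every request, so every lookup misses; that is exactly the dynamic-content case in which caching cannot help and compression cost is paid in full.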

It should be noted that Microsoft's defaults for compression are zlib level 7 for static files, and zlib level 0 for dynamic content. This results in a significantly different CPU-to-bandwidth comparison profile for dynamic content; CPU usage is significantly lower, and bandwidth usage is significantly higher. IIS 7.0 also employs CPU usage monitoring to disable compression when the CPU usage reaches the configured threshold, and re-enable compression when CPU usage drops below a separately-defined lower threshold. This CPU management was disabled during our test; however, in real-world usage it should be enabled to take advantage of this feature. We will also explore this feature in a future report.
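The CPU monitor described above is a hysteresis switch: compression turns off at an upper CPU threshold and back on only below a separate, lower one, which keeps it from flapping when CPU hovers near a single cutoff. The toy model below uses parameters that loosely mirror IIS's `dynamicCompressionDisableCpuUsage` and `dynamicCompressionEnableCpuUsage` settings; the threshold values in the usage note are illustrative, and IIS's actual sampling interval and comparison rules may differ from this sketch.

```python
class CpuGatedCompression:
    """Toy model of CPU-threshold gating with hysteresis (not IIS's implementation)."""

    def __init__(self, disable_at: float, enable_at: float):
        if enable_at >= disable_at:
            raise ValueError("enable_at must be below disable_at")
        self.disable_at = disable_at  # CPU % at which compression switches off
        self.enable_at = enable_at    # CPU % below which it switches back on
        self.enabled = True

    def observe(self, cpu_percent: float) -> bool:
        """Feed one CPU sample; return whether compression is enabled afterwards."""
        if self.enabled and cpu_percent >= self.disable_at:
            self.enabled = False
        elif not self.enabled and cpu_percent < self.enable_at:
            self.enabled = True
        return self.enabled
```

With `CpuGatedCompression(disable_at=90, enable_at=50)`, the samples 40, 95, 70, 60, 45, 80 yield True, False, False, False, True, True: compression stays off through the 70 and 60 readings and resumes only once CPU falls below 50.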

These features and design choices indicate that there is a growing need for more precise control over compression settings and for an expanded choice of compression levels, models, and designs. There may even be a place for dynamically-controlled compression based on overall CPU usage, specific processor core dedication to compression, CPU usage targets, bandwidth targets, and perhaps even data analysis of files to determine the optimum compression level for each file or type of file.

Appendix A: Methodology

Software Versions and Settings

- gzip.dll - File version: 7.0.6001.18000, Product version: 7.0.6001.18000

- w3wp.exe - File version: 7.0.6001.18000, Product version: 7.0.6000.16386

- system.webserver.caching: enabled="false"

- system.webserver.caching: enableKernelCache="false"

- system.webserver.httpCompression: dynamicCompressionDisableCpuUsage="100"

- system.webserver.httpCompression.scheme: dynamicCompressionLevel="6"

- system.webserver.urlCompression: doDynamicCompression="true"
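Before each run it is worth confirming that the server configuration actually matches the settings listed above. The sketch below reads an applicationHost.config document with Python's standard `xml.etree.ElementTree` and reports any mismatch. Attribute names follow the IIS configuration schema, in which the kernel-cache switch is spelled `enableKernelCache`. The sketch only inspects the top-level `<system.webServer>` section (settings inside `<location>` tags or site-level web.config files are not checked), and the assumption that the `gzip` scheme is the one whose level was changed is ours.

```python
import xml.etree.ElementTree as ET

# Expected attribute values, keyed by ElementPath relative to <system.webServer>.
EXPECTED = {
    "caching": {"enabled": "false", "enableKernelCache": "false"},
    "httpCompression": {"dynamicCompressionDisableCpuUsage": "100"},
    "httpCompression/scheme[@name='gzip']": {"dynamicCompressionLevel": "6"},
    "urlCompression": {"doDynamicCompression": "true"},
}


def check_settings(config_xml: str, expected=EXPECTED) -> list:
    """Return a list of mismatches; an empty list means every expected value is present."""
    root = ET.fromstring(config_xml)
    web = next((child for child in root if child.tag == "system.webServer"), None)
    if web is None:
        return ["missing element: system.webServer"]
    problems = []
    for path, attrs in expected.items():
        elem = web.find(path)
        if elem is None:
            problems.append(f"missing element: {path}")
            continue
        for name, want in attrs.items():
            got = elem.get(name)
            if got != want:
                problems.append(f"{path} @{name}: expected {want!r}, got {got!r}")
    return problems
```

For the uncompressed baseline runs, the same check applies with `doDynamicCompression` expected to be `"false"`.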

The toggle used to control whether or not compression was enabled was system.webserver.urlCompression doDynamicCompression, with “false” representing no compression and “true” representing compression. The dynamic compression level was modified from the default zlib level 0 to zlib level 6 in order to allow for direct comparison with our previous Apache test. The main cache and the kernel cache had to be disabled because caching occurred even with files designated as dynamic. The dynamicCompressionDisableCpuUsage option was set to “100” to avoid dynamic compression being disabled during the test by the CPU monitor. The applicationHost.config file is available in Appendix B. All other configuration was the default.
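The effect of the compression level on the size/CPU balance can be explored outside IIS. zlib's own scale runs from 0 (store only, no compression) through 1 (fastest) to 9 (best compression). We have not verified how the level numbers IIS exposes map onto zlib's internals, so the following sketch, which uses Python's `zlib` module, is only an illustration of the general trade-off rather than a reproduction of IIS behavior.

```python
import time
import zlib


def level_profile(data: bytes, levels=(1, 6, 9), repeats: int = 10) -> dict:
    """Map zlib level -> (compressed size as a fraction of input, CPU seconds per pass)."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    profile = {}
    for level in levels:
        start = time.process_time()
        for _ in range(repeats):
            out = zlib.compress(data, level)
        elapsed = (time.process_time() - start) / repeats
        profile[level] = (len(out) / len(data), elapsed)
    return profile
```

Running `level_profile` over one of the test files shows how much extra size reduction each step up in level buys and what it costs in CPU time, which is the same trade-off this report measures at the server level.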

The load testing software was Web Performance Load Tester version 3.5.6556, with the Server Agent of the same version installed on the test web server.

Hardware and OS

The target IIS 7.0 web server was running on a Dell PowerEdge SC1420 with a 2.8GHz Xeon, family 15, model 4 – Pentium 4 architecture (Gallatin) with hyperthreading on, 1MB of L2 cache, 1GB of RAM, and an 800 MHz system bus. The target server was running Windows Server 2008 Standard (32-bit), default installation, updated to the current Microsoft patch level as of 10 December 2008.

Three of the load-generating engines were each running on a Dell PowerEdge 300 with 2 x 800MHz Pentium III processors and 1GB of RAM. The fourth load-generating engine was running on a Pentium 4 2.4GHz with 2.25GB of RAM. Each engine was running the Web Performance Load Engine 3.5 Boot Disk version 3.5.6556.

The engines and server were networked via a Dell PowerConnect 2716 gigabit switch. The server's port ran at 1Gb and each load engine's port ran at 100Mb.

The Load Tester GUI was running on Windows XP SP3, on a Dell Dimension DIM3000, Pentium 4 2.8GHz with 1GB of RAM. This machine was connected to a 100Mb port on a second switch. This had no impact on the results, since the GUI's bandwidth requirements are small, but is mentioned here for completeness.

Test cases

The test cases chosen were five HTML files composed primarily of text, cut using the "dd" tool to 10KB, 25KB, 50KB, 75KB, and 100KB from the 336KB source file (an HTML version of Cory Doctorow's Down and Out in the Magic Kingdom). The load engines did not uncompress or parse these files in any way. Each virtual user waits one second between iterations of its test case. A book was chosen because its content is relatively homogeneous across the entire file without being repetitive; repetition of large amounts of data makes compression significantly easier.
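Cutting the test files amounts to taking fixed-length leading slices of the source, which a command such as `dd if=book.html of=book-10kb.html bs=1024 count=10` does. The Python sketch below produces the same slices; the file names are hypothetical, and treating "KB" as 1024 bytes is an assumption, since the report does not state the block size used. Slicing by bytes can end a file in the middle of a tag, which does not matter here because the load engines never parse the content.

```python
from pathlib import Path

SIZES_KB = (10, 25, 50, 75, 100)


def prefixes(data: bytes, sizes_kb=SIZES_KB) -> dict:
    """Return {size_kb: first size_kb * 1024 bytes of data}, like `dd bs=1024 count=N`."""
    out = {}
    for kb in sizes_kb:
        n = kb * 1024
        if n > len(data):
            raise ValueError(f"source is shorter than {kb} KB")
        out[kb] = data[:n]
    return out


def write_prefixes(source: Path, out_dir: Path, sizes_kb=SIZES_KB) -> list:
    """Write each slice as <stem>-<N>kb<suffix> in out_dir and return the paths written."""
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for kb, chunk in prefixes(source.read_bytes(), sizes_kb).items():
        target = out_dir / f"{source.stem}-{kb}kb{source.suffix}"
        target.write_bytes(chunk)
        paths.append(target)
    return paths
```

Because every smaller file is a prefix of the larger ones, differences in compressibility between sizes come only from the extra text each larger file adds.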

These test cases are intended to simulate the compression of dynamically generated web pages that are customized on an individual basis, such that caching has little to no effect. They also represent static files that are repeatedly compressed without being cached, such as JavaScript or CSS files used on a homepage or across multiple pages, or a single static page that experiences a surge in web traffic.

We used static HTML files to avoid any CPU usage related to dynamic generation of the file, so that the impact of the compression could be clearly seen and not confused with other web server activity; a dynamically generated HTML page would add CPU usage to the load based on how difficult it was to generate. In short, these test cases represent the best-case bandwidth scenario, with 100% highly compressible content. They also represent a worst-case CPU scenario, with all content being compressed on the fly and no caching available to prevent repetitive compression. A real-world website will likely have lower CPU utilization because some requested files are not compressed. This would, however, also reduce the bandwidth gains, since those files are often images or other static files that are already compressed and thus use the same amount of bandwidth in either scenario.
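The dilution described above can be estimated as a weighted average: each class of content contributes its share of total bytes times the reduction compression achieves on it, with already-compressed images contributing zero. The page mix in the sketch below (40% compressible HTML at the ~67% measured reduction, 60% images) is a hypothetical example, not data from this test.

```python
def blended_reduction(mix):
    """Overall fractional byte reduction for (byte_share, reduction) pairs.

    byte_share: fraction of all response bytes in that class (shares must sum to 1).
    reduction: fractional savings compression achieves on that class
               (0 for content such as images that is already compressed).
    """
    total = sum(share for share, _ in mix)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"byte shares sum to {total}, not 1")
    return sum(share * reduction for share, reduction in mix)


# Hypothetical page weight: 40% compressible HTML at ~67% reduction, 60% images.
print(f"{blended_reduction([(0.4, 0.67), (0.6, 0.0)]):.1%}")
```

The CPU cost scales with the compressible share in the same way, which is why a mixed real-world site sees both smaller savings and a smaller CPU penalty than this test.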

The test data repository file is available for examination; the demo version of Load Tester can view the test cases, load configurations, detailed raw metrics, and the test reports. Test reports are also available in HTML; see Appendix C.

Load Configuration

- Five test cases as described above.

- Each test case is run independently, with the target web server being rebooted between each test.

- For each test case, there are two tests: a baseline without compression, and a test with compression enabled.

- Every VU (virtual user) runs the same test case, with a 1-second delay in between.

- Each VU is simulating a 5Mbps connection (e.g. cable/DSL connection).

- Each test is 8 minutes in length, starting with 50 users and increasing the load by 50 users every two minutes at random intervals inside that minute, to a maximum load of 200 users.

- 5 second sample period.

- The test parameters were determined through a number of preliminary tests that gauged each load engine's capacity and confirmed that consumed bandwidth was not high enough to affect the test.
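The ramp and the bandwidth headroom can be sanity-checked with a small model. The sketch below computes the nominal VU count over time (ignoring the random start times within each step) and an idealized offered load, assuming the server responds instantly, each VU downloads the full uncompressed file over its simulated 5Mbps link and then waits one second, and the VUs are split evenly across the four engines. All of these simplifications are assumptions; real response times only lower the request rate, so this is an upper bound for a given file.

```python
def active_users(t_seconds: float, start: int = 50, step: int = 50,
                 step_every: float = 120.0, max_users: int = 200) -> int:
    """Nominal VU count t seconds into the test (ignores random start times within a step)."""
    return min(max_users, start + step * int(t_seconds // step_every))


def offered_load(users: int, file_bytes: int, link_bps: float = 5_000_000,
                 think_s: float = 1.0) -> tuple:
    """Idealized (requests/s, bits/s) if the server responded instantly.

    Each VU downloads the file over its simulated link, then waits think_s seconds.
    """
    transfer_s = file_bytes * 8 / link_bps
    hits_per_sec = users / (transfer_s + think_s)
    return hits_per_sec, hits_per_sec * file_bytes * 8


hits, bps = offered_load(active_users(7 * 60), 100 * 1024)
print(f"{hits:.0f} hits/s, {bps / 1e6:.0f} Mbps total, {bps / 4e6:.0f} Mbps per engine")
```

Under these assumptions, 200 VUs fetching the 100KB file come to roughly 140 Mbps in total, or about 35 Mbps per engine, comfortably below each engine's 100Mb link and the server's 1Gb link.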

Test Procedure

Each test run followed these steps:

- Turn compression in the IIS configuration on or off, depending on the test

- Restart the server

- Start the server monitoring agent

- Run the load test

Appendix B: IIS 7.0 Configuration

ApplicationHost.config

Appendix C: References

Full Test Reports

| File size | Without compression | With compression |
|---|---|---|
| 10KB | clean | compressed |
| 50KB | clean | compressed |
| 75KB | clean | compressed |
| 100KB | clean | compressed |

Feedback & Comments

Comments about this report may be posted on the corresponding company blog post.

Version History

v1.0 - 1st public release